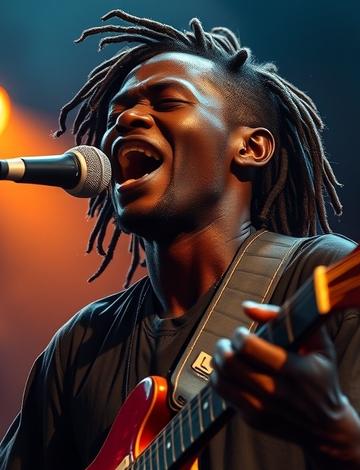

Trump and Netanyahu's White House Meeting Sparks Controversy Over Tariff Relief and Hostage Discussions

A High-Stakes Meeting: Trump and Netanyahu at the White House

In a significant diplomatic event, President Donald Trump met with Israeli Prime Minister Benjamin Netanyahu at the White House. This meeting, which has drawn considerable attention, primarily focused on Israel's request for tariff…

Is Age Just a Number for Rock Legends Who Refuse to Fade Away?

The Unstoppable Spirit of Rock Legends

In the world of rock music, age often seems like just a number. Many legendary artists continue to perform and create music well into their golden years, defying the conventional wisdom that suggests it's time to retire. This phenomenon raises an intriguing…

Celebrating the Life and Legacy of Amadou Bagayoko, the Musical Icon

A Tribute to Amadou Bagayoko's Musical Journey

The world of music has lost a shining star with the passing of Amadou Bagayoko, one half of the celebrated duo Amadou & Mariam. Known for their unique blend of Malian music and global influences, Amadou and Mariam captured hearts worldwide. Their…

What Happened When Morgan Wallen Left SNL Abruptly Will Shock You

The Shocking Exit of Morgan Wallen from SNL

In a surprising turn of events, country music star Morgan Wallen made headlines after his abrupt exit from Saturday Night Live (SNL). This incident has left fans and viewers buzzing with questions. What could have led to such a sudden departure? Was it a…

Are Meta's AI Model Benchmarks Misleading You?

Understanding Meta's AI Model Benchmarks

In the rapidly evolving world of artificial intelligence, benchmarks play a crucial role in evaluating the performance of AI models. Recently, Meta has released new benchmarks for its AI models, but the accuracy and reliability of these benchmarks have raised…

How Cloudflare Uses AI to Create a Maze of Irrelevant Facts

The Intriguing Intersection of AI and Internet Security

In the rapidly evolving world of technology, the intersection of artificial intelligence (AI) and internet security is becoming increasingly significant. Cloudflare, a prominent player in this arena, has taken a unique approach by using AI to…

Can You Believe Microsoft Copilot Created a Free AI Shooter Game?

Introduction to AI-Generated Gaming

Imagine a world where artificial intelligence not only assists you in daily tasks but also creates entire video games. Microsoft Copilot has made this dream a reality by generating an AI version of one of the most iconic shooter games of all time. Yes, you read…

Meet Claude: Your New AI Study Companion for College Success

April 06, 2025

Microsoft Copilot Can Now Complete Web Tasks on Its Own

The New Era of Automation with Microsoft Copilot

In a groundbreaking development, Microsoft has announced that its Copilot feature can now autonomously complete web tasks. This advancement marks a significant leap in the realm of automation and artificial intelligence, allowing users to streamline…

Is This the Future of Finance You Never Expected?

The Evolution of Financial Markets

In recent years, the landscape of finance has undergone a seismic shift. The rise of technology has transformed how we invest, save, and manage our money. But what does this mean for the future? Are we witnessing a revolution that will redefine our financial…

What You Need to Know About the Latest Trends in Finance

Understanding the Current Financial Landscape

In today's rapidly evolving financial environment, staying informed about the latest trends is crucial for both investors and consumers. The finance sector is witnessing significant changes driven by technology, regulatory shifts, and global economic…

Why the Nintendo Switch 2 Might Be the Most Unexciting Yet Essential Console

The Anticipation for Nintendo Switch 2

As gaming enthusiasts eagerly await the release of the Nintendo Switch 2, it's hard not to feel a mix of excitement and skepticism. Will this new console live up to the legacy of its predecessor, or will it disappoint? The Nintendo Switch has been a game…

Maximize Your Carry-On: Essential Items for Smart Travelers

The Ultimate Guide to Maximizing Your Carry-On Luggage

Traveling can be a thrilling experience, but it often comes with the challenge of packing efficiently. Whether you're heading out for a weekend getaway or a long-haul flight, knowing how to maximize your carry-on luggage can make all the…

Navigating Thailand's Hidden Travel Etiquette

Understanding Thailand's Unique Travel Culture

Traveling to Thailand offers a vibrant tapestry of experiences, from bustling markets to serene temples. However, to truly appreciate this beautiful country, it's essential to understand its unique travel etiquette. Here are some unwritten rules that…

Unveiling the Best Hotels in Punta Cana for Your Dream Getaway

Introduction to Punta Cana's Luxurious Retreats

Punta Cana, a stunning paradise located in the Dominican Republic, is renowned for its pristine beaches, crystal-clear waters, and luxurious resorts. If you're planning a getaway to this tropical haven, you're in for a treat. But with so many options…

Stylish Alternatives to Ballet Flats You Need to Try

Discovering New Footwear Trends

Ballet flats have long been a staple in many wardrobes, celebrated for their comfort and versatility. However, as fashion evolves, so do our choices. If you're looking to switch things up, there are plenty of stylish alternatives to ballet flats that can elevate your…

Explore the Latest Trendy Blouses from Zara That Will Elevate Your Wardrobe

Discover Zara's Chic Blouses That Are Taking Over Fashion

If you're looking to refresh your wardrobe, Zara's latest collection of blouses is a must-see. These stylish pieces are not just clothing; they are statements that can elevate any outfit. From casual outings to formal events, Zara's blouses…

What to Expect from the 1923 Season 2 Release Schedule

The Anticipation for 1923 Season 2

Fans of the acclaimed series "1923" have been eagerly awaiting the next chapter in this gripping tale. The show, which has captivated audiences with its rich storytelling and complex characters, is set to return for its second season. But what can viewers expect…

Ranking the Most Intriguing Characters from The White Lotus

Introduction to The White Lotus Phenomenon

The White Lotus has captivated audiences with its sharp social commentary and complex characters. This HBO series, created by Mike White, delves into the lives of wealthy vacationers at a luxury resort, exposing their flaws and the societal issues they…

How to Effectively Treat Dry Skin on Your Eyelids

Understanding Dry Skin on Eyelids

Dry skin on the eyelids can be both uncomfortable and unsightly. This condition is often caused by environmental factors, allergies, or even the products we use daily. If you’ve ever experienced tightness, flakiness, or irritation around your eyes, you’re not alone…

Indigenous Women's Reproductive Rights Are Under Threat: What You Need to Know

Understanding the Current Landscape of Indigenous Women's Reproductive Rights

In recent years, the conversation surrounding reproductive rights has gained significant traction, particularly concerning Indigenous women. The intersection of culture, health, and policy creates a complex landscape that…
